Foreword

As an IT-person and Apple geek I started listening to the NosillaCast podcast by Allison Sheridan somewhere in the early '10s. It’s a podcast with a wide variety of tech topics with a slight Apple bias. Topics range from an interview of a blind person using an iPhone to an article on the security measures of a certain bank’s webapp to tutorials on how to fix a tech problem with one of your devices. I especially enjoyed the sections where Bart was explaining some technical topic. The podcasts kept me company on my long commute to and from work.

Somewhere in 2013 Bart announced he was starting a series of tutorials on the Terminal and all kinds of commands to teach Allison. Cool, let’s see how much I already know. The first sessions were easy. I knew most of what Bart was explaining, and yes, I was one of those persons yelling in the car when Bart quizzed Allison.

Soon I heard new things and quickly it became a game. What would Bart explain this time, and would I know it or would it be new? I started to look forward to the commute time because that’s the time I would listen to the podcast. I even felt sad when Bart explained that episode 35 was the last one of the series. Luckily time proved him wrong and the episodes kept coming.

When people at work expressed fear of Terminal commands, I would point them to the series on Bart’s website, allowing my co-workers to read his tutorials and listen to the podcast audio. I even added the most relevant episodes to the training we had for our new junior developers.

In 2016 I went to Dublin for a holiday and actually met Bart in real life. Turns out he’s a great guy and a kindred spirit. It’s his passion to teach people about the stuff he loves that makes him so valuable to the community. He combines that with academic precision to present well-researched information in bite-sized chunks that make it easy to follow along, no matter what your skill level is.

I hope you get as much fun and knowledge out of this series as I did, now in an eBook.

Helma van der Linden

TTT fan

Preface

Taming the Terminal was created as a podcast and written tutorial with Bart Busschots as the instructor and Allison Sheridan as the student. Taming the Terminal started its life as part of the NosillaCast Apple Podcast and was eventually spun off as a standalone Podcast. Bart and Allison have been podcasting together for many years, and their friendship and camaraderie make the recordings a delightful way to learn these technical concepts. To our American readers, note that the text is written in British English so some words such as "instalment" may appear misspelled, but they are not.

The book version of the podcast was a labor of love by Allison Sheridan and Helma van der Linden as a surprise gift to Bart Busschots for all he has done for the community.

If you enjoy Taming the Terminal, you may also enjoy Bart and Allison’s second podcast entitled Programming By Stealth. This is a fortnightly podcast where Bart is teaching the audience to program, starting with HTML and CSS (that’s the stealthy part since it’s not proper programming), into JavaScript and beyond. As with Taming the Terminal, Bart creates fantastic written tutorials for Programming By Stealth, including challenges to cement the listener’s skills.

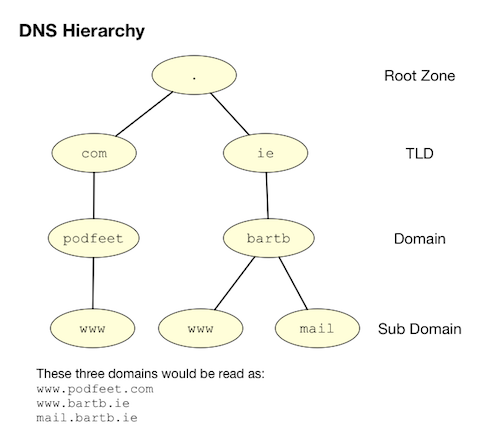

You can find Programming By Stealth and Taming the Terminal podcasts along with Allison’s other shows at podfeet.com/blog/subscribe-to-the-podcasts/.

Contributors to Taming the Terminal

Bart Busschots is the author of all of the written tutorials in the Taming the Terminal series so the lion’s share of the credit goes to him. Allison Sheridan is the student of the series asking the dumb questions, and she created the podcast. Steve Sheridan convinced Bart and Allison that instead of having the series buried inside the larger NosillaCast Podcast, it should be a standalone podcast. He did all of the editing to pull out the audio for the 35 original episodes from the NosillaCast, topped and tailed them with music, and pushed Bart and Allison to record the intros. Steve even created the Taming the Terminal logo.

Allison had a vision of Taming the Terminal becoming an eBook but had no idea how to accomplish this. Helma van der Linden figured out how to programmatically turn the original feature-rich set of HTML web pages into an ePub book as well as producing a PDF version, and even an HTML version. She managed the GitHub project and fixed the technical aspects of the book and kept Allison on task as she did the proofreading and editing of the entire book. Allison created the book cover as well.

Introduction

Taming the Terminal is specifically targeted at learning the macOS Terminal but most of the content is applicable to the Linux command line. If you’re on Windows, it is recommended that you use the Windows Subsystem for Linux to learn along with this book. Wherever practical, Bart explains the differences that you may encounter if you’re not on macOS.

The series started in April 2013 and was essentially complete in 2015 after 35 of n lessons, but Bart carefully labeled them as "of n" because he knew that over time there likely would be new episodes. More episodes have indeed come out, and this book will be updated over time as the new instalments are released.

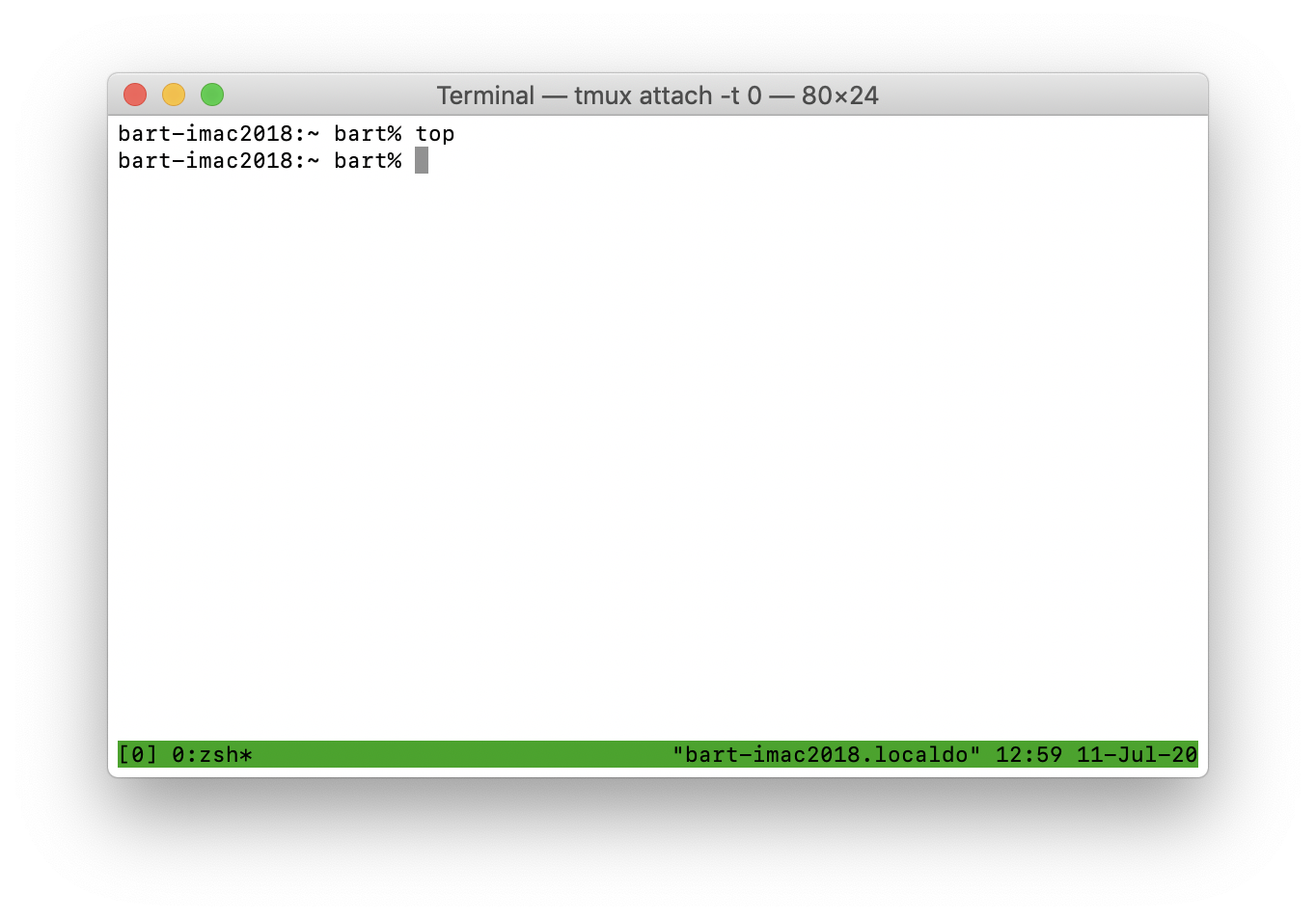

Zsh vs Bash

In macOS Catalina, released after much of the tutorial content in this book was released, Apple replaced the default shell bash with the zsh shell. As a result you’ll notice the prompt change from $ to % partway through the book. There may be cases where the instructions given during the bash days might not work with today’s zsh.
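If you’re not sure which of the two shells a given Terminal session is running, one way to check is to look for the version variable each shell sets for itself. This is only a small sketch: BASH_VERSION and ZSH_VERSION are set by bash and zsh respectively, while current_shell is just a name chosen for this example.

```shell
# bash sets BASH_VERSION and zsh sets ZSH_VERSION, so whichever one
# is non-empty tells us which shell is interpreting these lines
if [ -n "${BASH_VERSION:-}" ]; then
  current_shell="bash"
elif [ -n "${ZSH_VERSION:-}" ]; then
  current_shell="zsh"
else
  current_shell="another shell"
fi

echo "This session is running: $current_shell"
```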

To switch back to bash if you do run into problems, simply enter:

bash --login

You’ll be shown this warning explaining how to switch your default interactive shell back to zsh, and can proceed with the lessons.

The default interactive shell is now zsh.

To update your account to use zsh, please run `chsh -s /bin/zsh`.

For more details, please visit https://support.apple.com/kb/HT208050.

If you’d like to see these instalments in their web form, you can go to ttt.bartificer.net.

If you enjoy the format of this series, you might also enjoy the podcast and written tutorials for Bart and Allison’s next series, Programming By Stealth at pbs.bartificer.net.

Feedback on the book can be sent to allison@podfeet.com.

We hope you enjoy your journey with Taming the Terminal.

Full Disk Access

Starting with macOS 10.14 Mojave, Apple added privacy controls that prevent apps from accessing your entire disk without explicit permission. This series assumes the Terminal app has been granted either Files and Folders or Full Disk Access permission under Privacy & Security in the macOS settings.

TTT Part 1 of n — Command Shells

I have no idea whether or not this idea is going to work out, but on this week’s Chit Chat Across the Pond audio podcast segment on the NosillaCast Apple Podcast (to be released Sunday evening PST), I’m going to try to start what will hopefully be an on-going series of short unintimidating segments to gently introduce Mac users to the power contained within the OS X Terminal app.

Note: this entire series was later moved to a standalone podcast called Taming the Terminal at podfeet.com/….

I’m on with Allison every second week, and I’ll have other topics to talk about, so the most frequent the instalments in this series could be would be biweekly, but I think they’ll turn out to be closer to monthly on average. While the focus will be on OS X, the majority of the content will be equally applicable to any other Unix or Linux operating system.

In the last CCATP, we did a very detailed segment on email security. With the benefit of hindsight, I realise it was too much to do at once and should have been split into two segments, but it still received the strongest listener response of any of my many contributions to the NosillaCast in the last 5 or more years. I hope I’m right in interpreting that as evidence that there are a lot of NosillaCast listeners who want to get a little more technical and get their hands dirty with some good old-fashioned nerdery!

The basics

In this first segment, I just want to lay a very basic foundation. I plan to take things very slowly with this series, so I’m going to start the way I mean to continue. Let’s start with some history and some wider context.

Before the days of GUIs (Graphical User Interfaces), and even before the days of simple menu-driven non-graphical interfaces like the original Word Perfect on DOS, the way humans interacted with computers was through a “command shell”. Computers couldn’t (and still can’t) speak or interpret human languages properly, and humans find it very hard to speak in native computer languages like binary machine codes (though early programmers did actually have to do that). New languages were invented to help bridge the gap, and allow humans and computers to meet somewhere in the middle.

The really big problem is that computers have absolutely no intelligence, so they can’t deal with ambiguity at all. Command shells use commands that look Englishy, but they have very rigid structures (or grammars if you will) that remove all ambiguity. It’s this rigid structure that allows the automated translation from shell commands to binary machine code the computer can execute.

When using any command shell, the single most important thing to remember is that computers are absolutely stupid, so they will do EXACTLY what you tell them to, no matter how silly the command you give them. If you tell a computer to delete all the files on a hard drive, it will, because, well, that’s what you asked it to do! Another important effect of computers’ total lack of intelligence is that there is no such thing as “close enough” — if you give it a command that’s nearly valid, a computer can no more execute it than if you’d just mashed the keyboard with your face. Nearly right and absolute gibberish are just as unintelligible to a computer. You must be exact, and you must be explicit at all times.

No wonder we went on to invent the GUI, this command shell malarkey sounds really complicated! There is no doubt that if the GUI hadn’t been invented the personal computer wouldn’t have taken off like it has. If it wasn’t for the GUI, there’s no way there would be more computers than people in my parents’ home (two of them, and including iPhones and tablets, 6 computers!). Even the nerdiest of nerds use GUI operating systems most of the time because they make a lot of things both easier and more pleasant. BUT not everything.

We all know that a picture says a thousand words, but when you are using a GUI it’s the computer that is showing you a picture, all you get to do is make crude hand gestures at the computer, which I’d say is worth about a thousandth of a word — so, a single shell command can easily be worth a thousand clicks. This is why all desktop OSes still have command shells built in — not as their ONLY user interface like in times past, but as a window within the GUI environment that lets you communicate with the computer using the power of a command shell.

Even Microsoft understands the power of the command shell. DOS may be dead, but the new Windows PowerShell is giving Windows power users a new, more modern, and more powerful command shell than ever before. Windows 8 may have removed the Start menu, but PowerShell is still there! All Linux and Unix distros have command shells, and OS X gives you access to an array of different command shells through Terminal.app.

Just like there is no one GUI, there is no one command shell. Also, just like most GUIs are at least somewhat similar to each other (they all use icons, for example), most command shells are also quite similar, having a command prompt that accepts commands with arguments to supply input to the commands or alter their behaviour. OS X does not ship with one command shell, it ships with SIX (sh, bash, zsh, csh, tcsh, and ksh)!

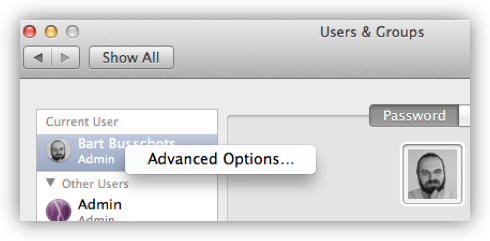

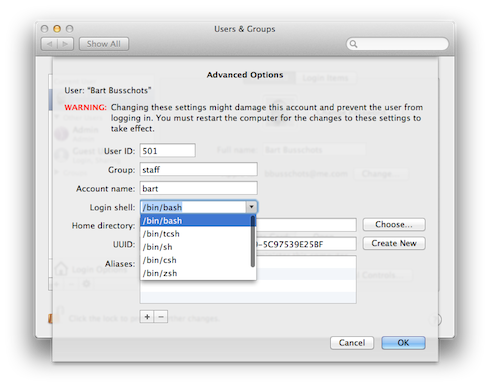

You can see the list of available shells (and set your default shell) by opening System Preferences, going to the Users & Groups pref pane, unlocking it, then right-clicking on your username in the sidebar, and selecting Advanced Options ...:

The default shell on OS X is the Bourne Again Shell (Bash), so that’s the shell we’ll be using for the remainder of this series. If you’ve not altered the defaults, then whenever you open a Terminal window on OS X, what you’re presented with is a Bash command shell. Bash is an updated and improved version of the older Bourne Shell (sh), which was the default shell in Unix for many years. The original Bourne Shell dates all the way back to 1977 and is called after its creator, Stephen Bourne. The Bourne Again Shell is a ‘newer’ update to the Bourne Shell dating back to 1989, the name being a nerdy religious joke by its author, Brian Fox. The Bourne Again Shell was not the last in the line of shells tracing their origins to the Bourne Shell. There is also zsh, which dates back to 1990, but despite being a more powerful shell, it hasn’t taken off like Bash has.

So, what does a shell do?

Does it just let you enter a single command and then run it?

Or is there more to it?

Unsurprisingly, there’s a lot more to it!

The shell does its own pre-processing before issuing a command for you, so a lot of things that we think of as being part of how a command works are actually features provided by the command shell.

The best example is the almost ubiquitous * symbol.

When you issue a command like chmod 755 *.php, the actual command is not given *.php as a single argument that it must then interpret. No, the * is interpreted and processed by the shell before being passed on to the chmod command.

It’s the shell that goes and looks for all the files in the current folder that end in .php, and replaces the *.php bit of the command with a list of all the actual files that end in .php, and passes that list on to the chmod command.
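One way to see this expansion for yourself is to hand the same pattern to a command twice, once where the shell is free to expand it and once wrapped in quotes (quoting is covered in the next instalment). The sketch below assumes a throwaway folder created with mktemp; demo_dir and count_args are names made up for this illustration, with count_args standing in for any command and simply reporting how many arguments it received.

```shell
# Create a throwaway folder with two .php files and one other file
demo_dir=$(mktemp -d "${TMPDIR:-/tmp}/ttt-glob.XXXXXX")
touch "$demo_dir/index.php" "$demo_dir/login.php" "$demo_dir/notes.txt"

# Stand-in for any command: report how many arguments arrived
count_args() { echo "$#"; }

# Unquoted *: the shell replaces the pattern with the matching files first
expanded_count=$(count_args "$demo_dir"/*.php)

# Quoted *: the shell passes the pattern through untouched as one argument
literal_count=$(count_args "$demo_dir/*.php")

echo "expanded by the shell: $expanded_count arguments"
echo "passed literally: $literal_count argument"

rm -r "$demo_dir"   # tidy up the throwaway folder
```

The command itself is identical in both cases. Only the shell’s pre-processing differs, which is why the first call sees two file names and the second sees a single piece of text containing a *.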

As well as providing wildcard substitution (the * thing), almost all shells also provide ‘plumbing’ for routing command inputs and outputs between commands and files, the definition of variables to allow sequences of commands to be generalised in a more reusable way, simple programming constructs to enable conditional actions, looping, and the grouping of sequences of commands into named functions, and the execution of a sequence of commands inside a file (scripting), and much more. Different shells also provide their own custom features to help make life at the command prompt easier for users. My favourite is tab-completion which is the single best thing Bash has to offer over sh in my opinion. OS X also brings some unique features to the table, with superb integration between the GUI and the command shell through features like drag-and-drop support in Terminal.app and shell scripting support in Automator.app. Of all the OSes I’ve used, OS X is the one that makes it the easiest to integrate command-line programs into the GUI.
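To give a flavour of a few of those features working together, here is a minimal sketch that uses a variable, a named function, a loop, routing of output to and from a file, and a conditional. Every name in it (greeting, greet, out_file, line_count) is invented for this example, and the details of each feature are left for later instalments.

```shell
greeting="Hello"                      # a variable
greet() { echo "$greeting, $1."; }    # a named function that uses it

# A temporary file to route output into
out_file=$(mktemp "${TMPDIR:-/tmp}/ttt-greetings.XXXXXX")

# A loop whose combined output is routed into the file instead of the screen
for name in Allison Bart Helma; do
  greet "$name"
done > "$out_file"

# Route the file back in as the input of another command (wc counts lines)
line_count=$(wc -l < "$out_file" | tr -d ' ')

# A conditional action based on the result
if [ "$line_count" -eq 3 ]; then
  echo "All $line_count greetings were written"
fi

rm "$out_file"   # tidy up
```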

I’ll end today by explaining an important part of the Unix philosophy, a part that’s still very much alive and well within OS X today. Unix aims to provide a suite of many simple command-line tools that each do just one thing, but do it very well. Complex tasks can then be achieved by chaining these simple commands together using a powerful command shell. Each Unix/Linux command-line program can be seen as a lego brick — not all that exciting on their own, but using a bunch of them, you can build fantastic things! My hope for this series is to help readers and listeners like you develop the skills to build your own fantastic things to make your computing lives easier. Ultimately the goal is to help you create more and better things by using automation to free you from as many of your repetitive tasks as possible!
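As a small taste of the lego-brick idea, the sketch below chains six simple tools to answer one question: which word appears most often in a list? The word list and the variable name most_common are made up for the illustration, and the | symbol that joins the commands is explained later in the series.

```shell
# printf emits one word per line, sort groups identical lines together,
# uniq -c counts each group, sort -rn puts the biggest count first,
# head keeps only the top line, and awk prints just the word from it
most_common=$(printf '%s\n' pear apple pear banana apple pear \
  | sort | uniq -c | sort -rn | head -n 1 | awk '{print $2}')

echo "Most common word: $most_common"
```

None of the individual commands knows anything about “most common words”. The result comes entirely from the way they are chained together.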

TTT Part 2 of n — Commands

This is the second instalment of an ongoing series. In the first instalment, I tried to give you a sort of 40,000-foot view of command shells — some context, some history, a very general description of what command shells do, and a little bit on why they are still very useful in the modern GUI age. The most important points to remember from last time are that command shells execute commands, that there are lots of different command shells on lots of different OSes, but that we will be focusing on Bash on Linux/Unix in general, and Bash on OS X in particular. The vast majority of topics I plan to discuss in these segments will be applicable on any system that runs Bash, but, the screenshots I use will be from OS X, and some of the cooler stuff will be OS X only. This segment, like all the others, will be used as part of my bi-weekly Chit Chat Across The Pond (CCATP) audio podcast segment with Allison Sheridan on podfeet.com/…

Last time I focused on the shell and avoided getting in any way specific about the actual commands that we will be executing within the Bash shell. I thought it was very important to make as clear a distinction between command shells and commands as possible, so I split the two concepts into two separate segments. Having focused on command shells last time, this instalment will focus on the anatomy of a command but will start with a quick intro to the Terminal app in OS X first.

Introducing the Terminal Window

You’ll find Terminal.app in /Applications/Utilities.

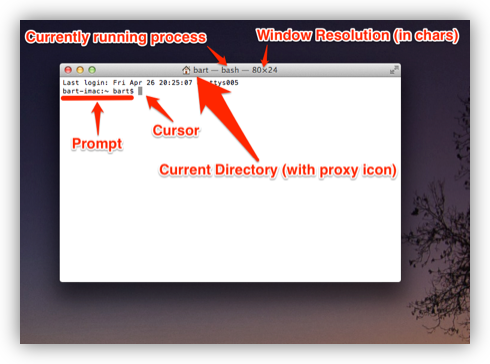

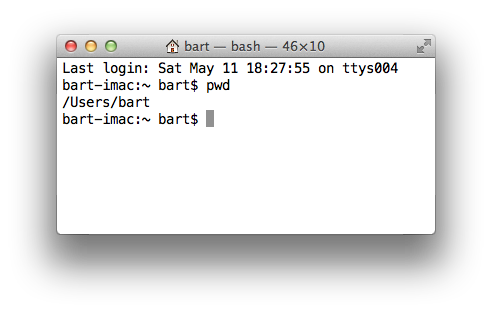

Unless you’ve changed some of the default settings (or are using a very old version of OS X), you will now see a white window that is running the bash command shell that looks something like this:

Let’s start at the very top of the window with its title bar. At the left of the title is a proxy icon representing the current directory for the current Bash command shell and beside it the name of that folder. (Note that directory is the Unix/Linux/DOS word for what OS X and Windows refer to as a folder.) Like Finder windows, Terminal sessions are always “in” a particular directory/folder. After the current directory will be a dash, followed by the name of the process currently running in the Terminal session (in our case, a bash shell). The current process is followed by another dash, and then the dimensions of the window in fixed-width characters.

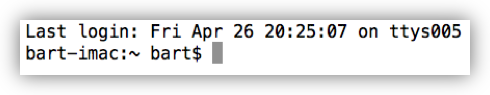

Within the window itself you will likely see a line of output telling you when you last logged in, and from where (if it was on this computer it will say ttys followed by some number, if it was from another computer, it will give the computer’s hostname). This will be followed on the next line by the so-called command prompt, and then the input cursor.

Let’s have a closer look at the command prompt. As with almost everything in Linux/Unix, the prompt is entirely customisable, so although Bash is the default shell on lots of different operating systems, the default prompt on each of those systems can be different. Let’s first look at the default Bash command prompt on OS X:

On OS X, the prompt takes the following form:

hostname:current_directory username$

First, you have the hostname of the computer on which the command shell is running (defined in ). This might seem superfluous, but it becomes exceptionally useful once you start using ssh to log in to other computers via the Terminal.

The hostname is followed by a : and then the command shell’s current directory (note that ~ is short-hand for “the current user’s home folder”, more on this next time).

The current directory is followed by a space, and then the Unix username of the user running the command shell (defined when you created your OS X account, defaults to your first name if available).

Finally, there is a $ character (which changes to a # when you run bash as the root user).

Again, this might not seem very useful at first, but there are many reasons you may want to switch your command shell to run as a different user from time to time, so it is also very useful information.

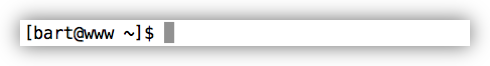

As an example of how the default prompts differ on different operating systems, below is an example from a RedHat-style Linux distribution (CentOS in this case):

As you can see, it contains the same information, but arranged a little differently:

[username@hostname current_directory]$

Finally, Debian-style Linux distributions like Ubuntu use a different default prompt again, but also showing the same information:

username@hostname:current_directory$
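In Bash the layout of the prompt is held in a variable called PS1, written using short backslash codes that Bash fills in each time it draws the prompt. The sketch below stores three format strings that reproduce the three layouts described above. The variable names are invented for this example, and the actual default on any given system may contain more than is shown here, so treat these as illustrations rather than the exact values your system ships with.

```shell
# Bash expands these codes each time it draws the prompt:
#   \h = hostname    \u = username
#   \W = name of the current folder    \w = path of the current folder
#   \$ = $ for a normal user, # for root
macos_style='\h:\W \u\$ '      # hostname:current_directory username$
redhat_style='[\u@\h \W]\$ '   # [username@hostname current_directory]$
debian_style='\u@\h:\w\$ '     # username@hostname:current_directory$

# To try one out for the current session only, assign it to PS1, e.g.:
#   PS1=$redhat_style
# To see what your own shell is currently using:
#   printf '%s\n' "$PS1"
printf '%s\n' "$macos_style" "$redhat_style" "$debian_style"
```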

Handy Tip: if you find the text in the Terminal window too small to read, you can make it bigger or smaller with ⌘++ or ⌘+-. This will affect just your current Terminal window. You can permanently change the default by editing the default profile in .

The Anatomy of a Command

Now that we understand the different parts of our Terminal window, let’s have a look at the structure of the actual commands we will be typing at that cursor!

I want to start by stressing that the commands executed by a command shell are not determined by the command shell, but by the operating system. Regardless of whether you use Bash on OS X, or zsh on OS X, you will have to enter OS X commands. Similarly, if you use Bash on Linux, you will have to enter Linux commands. Thankfully Linux and Unix agree almost entirely on the structure of their basic commands, so with a very few (and very annoying) exceptions, you can use the same basic commands on any Linux or Unix distribution (remember that at its heart OS X is FreeBSD Unix).

Commands take the form of the command itself optionally followed by a list of arguments separated by spaces, e.g.:

command argument_1 argument_2 … argument_n

Arguments are a mechanism for passing information to a command. Most commands need at least one argument to be able to perform their task, but some don’t. Both commands and arguments are case-sensitive, so beware your capitalisation!

For example, the cd (change directory) command takes one argument (a directory path):

bart-imac:~ bart$ cd /Users/Shared/

bart-imac:Shared bart$

In this example, the command is cd, and the one argument passed is /Users/Shared/.

Some commands don’t require any arguments at all, e.g. the pwd (present working directory) command:

bart-imac:~ bart$ pwd

/Users/bart

bart-imac:~ bart$

It is up to each command to determine how it will process the arguments it is given. When the developer was creating the command he or she will have had to make decisions about what arguments are compulsory, what arguments are optional, and how to parse the list of arguments the command is given by the shell when being executed.

In theory, every developer could come up with their own mad complex scheme for parsing argument lists, but in reality most developers loathe re-inventing the wheel (thank goodness), so a small number of standard libraries have come into use for parsing arguments. This means that many apps use very similar argument styles.

As well as accepting simple arguments like the cd command above, many apps accept specially formatted arguments referred to as flags.

Flags are usually used to specify optional extra information, with information that is required taken as simple arguments.

Flags are arguments (or pairs of arguments) that start with the - symbol.

The simplest kinds of flags are those that don’t take a value. They are specified using a single argument consisting of a - sign followed by a single letter.

For example, the ls (list directory) command can accept the flag -l (long-form listing) as an argument:

bart-imac:Shared bart$ ls -l

total 632

drwxrwxrwx 3 root wheel 102 5 Dec 2010 Adobe

drwxrwxrwx 3 bart wheel 102 27 Mar 2012 Library

drwxrwxrwx@ 5 bart wheel 170 28 Dec 21:24 SC Info

drwxr-xr-x 4 bart wheel 136 22 Feb 21:42 cfx collagepro

bart-imac:Shared bart$

The way the standard argument processing libraries work, flags can generally be specified in an arbitrary order.

The ls command also accepts the flag -a (list all), so the following are both valid and equivalent:

bart-imac:Shared bart$ ls -l -a

and

bart-imac:Shared bart$ ls -a -l

The standard libraries also allow flags that don’t specify values to be compressed into a single argument like so:

bart-imac:Shared bart$ ls -al

Sometimes flags need to accept a value, in which case the flag stretches over two arguments which have to be contiguous.

For example, the ssh (secure shell) command allows the port to be used for the connection to be specified with the -p flag, and the username to connect as with the -l flag, e.g.:

bart-imac:Shared bart$ ssh bw-server.localdomain -l bart -p 443

These single-letter flags work great for simple commands that don’t have too many options, but more complex commands often support many tens of optional flags.

For that reason, another commonly used argument processing library came into use that accepts long-form flags that start with a -- instead of a single -.

As well as allowing a command to support more flags, these longer form flags also allow values to be set within a single argument by using the = sign.

As an example, the mysql command (needs to be installed separately on OS X) allows the username and password to be used when making a database connection to be specified using long-form flags:

...$ mysql --username=bart --password=open123 example_database

Many commands support both long and short form arguments, and they can be used together, e.g.:

...$ mysql --username=bart --password=open123 example_database -v

So far we know that commands consist of a command optionally followed by a list of arguments separated by spaces, and that many Unix/Linux commands use similar schemes for processing arguments where arguments starting with - or -- are treated in a special way, and referred to as flags.
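If you would like to convince yourself that the order and grouping of simple flags really doesn’t matter, the following sketch runs ls three ways against a throwaway folder and compares the results. The folder, the file names, and the variable names are all made up for this example.

```shell
# A throwaway folder holding one ordinary file and one hidden file
demo_dir=$(mktemp -d "${TMPDIR:-/tmp}/ttt-flags.XXXXXX")
touch "$demo_dir/visible.txt" "$demo_dir/.hidden"

separate=$(ls -l -a "$demo_dir")    # two flags as two arguments
reordered=$(ls -a -l "$demo_dir")   # the same flags in the other order
combined=$(ls -al "$demo_dir")      # both flags compressed into one argument

# Compare the three listings as plain text
if [ "$separate" = "$reordered" ] && [ "$separate" = "$combined" ]; then
  flags_equivalent="yes"
else
  flags_equivalent="no"
fi
echo "All three listings identical: $flags_equivalent"

rm -r "$demo_dir"   # tidy up
```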

That all seems very simple, but, there is one important complication that we have to address before finishing up for this segment, and that’s special characters.

Within Bash (and indeed every other command shell), there are some characters that have a special meaning, so they cannot be used in commands or arguments without signifying to the command shell in some way that it should interpret these symbols as literal symbols, and not as representations of some sort of special value or function.

The most obvious example from what we have learned today is the space character. It is used as the separator between commands and the argument list that follows, and within that argument list as the separator between individual arguments. What if we want to pass some text that contains a space to a command as an argument? This happens a lot because spaces are valid characters within file and folder names on Unix and Linux, and file and folder names are often passed as arguments.

As well as the space there are other symbols that have special meanings. I won’t explain what they mean today, but I will list them:

- space
- #
- ;
- "
- '
- `
- \
- !
- $
- (
- )
- &
- <
- >
- |

You have two choices for how you deal with these special characters when you need to include them within an argument: you can escape each individual special character within the argument, or you can quote the entire argument.

Escaping is easy, you simply prefix the special character in question with a \.

If there are only one or two special characters in an argument this is the simplest and easiest solution.

But, it can become tedious if there are many such special characters.
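To make the effect of escaping visible, it helps to count how many arguments a command actually receives. In the sketch below count_args is a made-up helper that does nothing but report that number, and the folder name My Holiday Photos is purely hypothetical.

```shell
# Made-up helper: report how many arguments the shell handed over
count_args() { echo "$#"; }

# Unescaped spaces separate arguments, so this is three arguments
unescaped=$(count_args My Holiday Photos)

# Escaped spaces are ordinary characters, so this is one argument
escaped=$(count_args My\ Holiday\ Photos)

echo "without escaping: $unescaped arguments"
echo "with escaping: $escaped argument"
```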

Let’s use the echo command to illustrate escaping.

The echo command simply prints out the input it receives.

The following example passes the phrase Hello World! to the echo command as a single argument.

Note that this phrase contains two special characters that will need to be escaped, the space and the !:

bart-imac:~ bart$ echo Hello\ World\!

Hello World!

bart-imac:~ bart$

If you don’t want to escape each special character in an argument, you can quote the argument by prepending and appending either a " or a ' symbol to it.

There is a subtle difference between using ' or ".

When you quote with ' you are doing so-called full quoting: every special character can be used inside a full quote, but it is impossible to use a ' character inside a fully quoted argument.

For example:

bart-imac:~ bart$ echo '# ;"\!$()&<>|'

# ;"\!$()&<>|

bart-imac:~ bart$

When you quote with " on the other hand you are doing so-called partial quoting, which means you can use most special characters without escaping them, but not all.

Partial quoting will become very important later when we start to use variables and things because the biggest difference between full and partial quoting is that you can’t use variable substitution with full quoting, but you can with partial quoting (don’t worry if that makes no sense at the moment, it will later in the series).
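As a brief preview of that difference, the sketch below puts the same text inside both kinds of quotes. The variable name and its value are invented for this example, and variables themselves are explained properly later in the series.

```shell
name="Allison"   # a variable holding some text

# Full quoting: the $ is just a character, so nothing is substituted
full_quoted=$(echo 'Hello $name')

# Partial quoting: the shell replaces $name with the variable's value
partial_quoted=$(echo "Hello $name")

echo "$full_quoted"      # prints: Hello $name
echo "$partial_quoted"   # prints: Hello Allison
```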

When using partial quoting you still have to escape the following special characters:

- "
- `
- \
- $

For example:

bart-imac:~ bart$ echo "# ;\!()&<>|"

# ;\!()&<>|

bart-imac:~ bart$

and:

bart-imac:~ bart$ echo "\\ \$ \" \`"

\ $ " `

bart-imac:~ bart$

There are a few other peculiar edge cases with partial quoting. For example, you can’t end a partial quote with a !, and you can’t quote just a * on its own (there may well be more edge cases I haven’t bumped into yet).

That’s where we’ll leave it for this segment. We’ve now familiarised ourselves with the OS X Terminal window, and we’ve described the anatomy of a Unix/Linux command. In the next segment, we’ll look at the Unix/Linux file system, and at some of the commands used to navigate around it.

TTT Part 3 of n — File Systems

This is the third instalment of an on-going series. These blog posts are only part of the series, they are actually the side-show, being effectively just my show notes for discussions with Allison Sheridan on my bi-weekly Chit Chat Across the Pond audio podcast on podfeet.com/…. This instalment will be featured in NosillaCast episode 418 (scheduled for release late on Sunday the 12th of May 2013).

In the first instalment, we started with the 40,000ft view, looking at what command shells are, and why they’re still relevant in today’s GUI-dominated world. In the second instalment we looked at OS X’s Terminal.app, the anatomy of the Bash command prompt, and the anatomy of a Unix/Linux command. This time we’ll be looking at the anatomy of file systems in general, and the Unix/Linux file system in particular, and how it differs from the Windows/DOS file system many of us grew up using.

File systems

Physical storage media are nothing more than a massive array of virtual pigeon holes, each of which can hold a single 1 or 0. All your information is stored by grouping together a whole bunch of these pigeon holes and giving that grouping of 1s and 0s some kind of name. Humans simply could not deal with remembering that the essay they were working on is stored in sectors 4 to 1024 on cylinder 213 on the disk connected to the first SATA channel on the motherboard. We need some kind of abstraction to bring order to the chaos and to allow us to organise our data in a human-friendly way.

A good analogy would be a pre-computer office where the unit of storage was a single sheet of paper. Without some sort of logical system for organising all this paper, no one would ever be able to find anything, hence, in the real world, we developed ‘systems’ for ‘filing’ paper. Or, to put it another way, we invented physical filesystems, based around different ways of grouping and naming the pieces of paper. If a single document contained so much information that it ran over multiple pages, those pieces of paper were physically attached to each other using a tie, a paperclip, or a staple. To be able to recognise a given document at a glance, documents were given titles. Related documents were then put together into holders that, for some reason, were generally green, and those holders were then placed into cabinets with rails designed to hold the green holders in an organised way. I.e. we had filing cabinets containing folders which contained files. The exact organisation of the files and folders was up to the individual clerks who managed the data and was dependent on the kind of data being stored. Doctors tend to store files alphabetically by surname, while libraries love the Dewey Decimal system.

When it comes to computers, the job of bringing order to the chaos falls to our operating systems. We call the many different schemes that have been devised to provide that order, filesystems. Some filesystems are media dependent, while others are operating system dependent. E.g. the Joliet file system is used on CDs and DVDs regardless of OS, while FAT and NTFS are Windows filesystems, EXT is a family of Linux file systems, and HFS+ is a Mac file system.

There is an infinite number of possible ways computer scientists could have chosen to bring order to the chaos of bits on our various media, but, as is often the case, a single real-world analogy was settled on by just about all operating system authors. Whether you use Linux, Windows, or OS X, you live in a world of filesystems that contain folders (AKA directories) that contain files and folders. Each folder and file in this recursive hierarchical structure has a name, which allows us humans to keep our digital documents organised in a way that we can get our heads around. Although all our modern filesystems have their own little quirks under the hood, they all share the same simple architecture: your data goes in files, which go in folders, which can go in other folders, which eventually go into file systems.

You can have lots of files with the same name in this kind of file system, but, you can never have two items with the same name in the same folder.

This means that each file and folder can be uniquely identified by listing all the folders you pass to get from the so-called ‘root’ of the filesystem as far as the file or folder you are describing.

This is what we call the full path to a file or folder.

Where operating systems diverge is in their choice of separator, and in the rules they impose on file and folder names.

On all modern consumer operating systems, we write file paths as a list of folder and file names separated by some character, called the ‘path separator’.

DOS and Windows use \ (the backslash) as the path separator, on classic MacOS it was : (old OS X apps that use Carbon instead of Cocoa still use : when showing file paths, iTunes did this up until the recent version 11!), and on Linux/Unix (including OS X), / (the forward-slash) is used.

A single floppy disk and a single CD or DVD contain a single file system to hold all the data on a given disk, but that’s not true for hard drives, thumb drives, or networks. When formatting our hard drives or thumb drives we can choose to sub-divide a single physical device into multiple so-called partitions, each of which will then contain a single filesystem.

You’ve probably guessed by now that on our modern computers we tend to have more than one filesystem. Even if we only have one internal hard disk in our computer that has been formatted to have only a single partition, every CD, DVD, or thumb drive we own contains a filesystem, and, each network share we connect to is seen by our OS as yet another file system. In fact, we can even choose to store an entire filesystem (even an encrypted one) in a single file, e.g. DMG files, or TrueCrypt vaults.

So, all operating systems have to merge lots of file systems into a single over-arching namespace for their users. Or, put another way, even if two files have identical paths on two filesystems mounted by the OS at the same time, there has to be a way to distinguish them from each other. There are lots of different ways you could combine multiple filesystems into a single unified namespace, and this is where the DOS/Windows designers parted ways with the Unix/Linux folks. Microsoft combines multiple file systems together in a very different way to Unix/Linux/OS X.

Let’s start by looking at the approach Microsoft chose.

In DOS, and later Windows, each filesystem is presented to the user as a separate island of data named with a single letter, referred to as a drive letter.

This approach has an obvious limitation, you can only have 26 file systems in use at any one time!

For historical reasons, A:\ and B:\ were reserved for floppy drives, so, the first partition on the hard drive connected to the first IDE/SATA bus on the motherboard is given the drive letter C:\, the second one D:\ and so on.

Whenever you plug in a USB thumb drive or a memory card from a camera it gets ‘mounted’ on the next free drive letter.

Network shares also get mounted to drive letters.

Just like files and folders, filesystems themselves have names too, often referred to as Volume Names. Windows makes very little use of these volume names though, they don’t show up in file paths, but, Windows Explorer will show them in some situations to help you figure out which of your USB hard drives ended up as K:\ today.

An analogy you can use for file systems is that of a tree. The trunk of the tree is the base of the file system, each branch is a folder, and each leaf a file. Branches ‘contain’ branches and leaves, just like folders contain folders and files. If you bring that analogy to Microsoft’s way of handling filesystems, then the global namespace is not a single tree, but a small copse of between 1 and 26 trees, each a separate entity, and each named with a single letter.

If we continue this analogy, Linux/Unix doesn’t plant a little copse of separate trees like DOS/Windows, instead, they construct one massive Franken-tree by grafting smaller trees onto the branches of a single all-containing master tree.

When Linux/Unix boots, one filesystem is considered to be the main filesystem and used as the master file system into which other file systems get inserted as folders.

In OS X parlance, we call the partition containing this master file system the System Disk.

Because the system disk becomes the root of the entire filesystem it gets assigned the shortest possible file path, /.

If your system disk’s file system contained just two folders, folder_1 and folder_2, they would get the file paths /folder_1/ and /folder_2/ in Linux/Unix/OS X.

The Unix/Linux command mount can then be used to ‘graft’ filesystems into the master filesystem using any empty folder as the so-called mount point.

On Linux systems, it’s common practice to keep home folders on a separate partition, and to then mount that separate partition’s file system as /home/.

This means that the main filesystem has an empty folder in it called home and that as the computer boots, the OS mounts a specified partition’s file system into that folder.

A folder at the root of that partition’s file system called just allison would then become /home/allison/.

On regular Linux/Unix distributions the file /etc/fstab (file system table) tells the OS what filesystems to mount to what mount points.

A basic version of this file will be created by the installer, but in the past, whenever you added a new disk to a Linux/Unix system you had to manually edit this file.

Thankfully, we now have something called automount to automatically mount any readable filesystems to a predefined location on the filesystem when they are connected.

The exact details will change from OS to OS, but on Ubuntu, the folder /media/ is used to hold mount points for any file system you connect to the computer.

Unlike Windows, most Linux/Unix systems make use of filesystems’ volume names and use them to give the mount points sensible names, rather than random letters.

If you connect a USB drive containing a single partition with a filesystem with the volume name Allison_Pen_Drive, Ubuntu will automatically mount the filesystem on that thumb drive when you plug it in, using the mount point /media/Allison_Pen_Drive/.

If that pen drive contained a single folder called myFolder containing a single file called myFile.txt, then myFile.txt would be added to the filesystem as /media/Allison_Pen_Drive/myFolder/myFile.txt.
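To see this grafting on a real machine, one option is the standard df command, which reports the filesystem a given path lives on and where that filesystem is mounted. The sketch below assumes a POSIX-style df (the -P flag asks for the portable one-line-per-filesystem layout); the device names and mount points you see will differ from machine to machine.

```shell
# Show which filesystem holds the root of the tree; the last column is its mount point
df -P /

# Show which filesystem holds the current folder (it may well be a different one)
df -P .

# Keep just the mount point of the filesystem that holds the root folder
root_mount_point=$(df -P / | awk 'NR == 2 { print $NF }')
echo "The root of the tree is mounted at: $root_mount_point"
```

Pointing df -P at a folder on a thumb drive or network share should show a different filesystem, mounted somewhere below /.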

Having the ability to mount any filesystem as any folder within a single master filesystem allows you to easily separate different parts of your OS across different drives.

This is very useful if you are a Linux/Unix sysadmin or power user, but it can really confuse regular users.

Because of this, OS X took a simpler route.

There is no /etc/fstab by default (though if you create one OS X will correctly execute it as it boots).

The OS X installer does not allow you to split OS X over multiple partitions. Everything belonging to the OS X system, including all the users’ home folders, is installed on a single partition, the system disk, and all other file systems, be they internal, external, network, or disk images, get automatically mounted in /Volumes/ as folders named for the file systems’ volume labels.

Going back to our imaginary thumb drive called Allison_Pen_Drive (which Ubuntu would mount as /media/Allison_Pen_Drive/), OS X will mount that as /Volumes/Allison_Pen_Drive/ when you plug it in.

If you had a second partition, or a second internal drive, called, say, Fatso (a little in-joke for Allison), OS X would mount that as /Volumes/Fatso/.

Likewise, if you double-clicked on a DMG file you downloaded from the net, say with the Adium installer, OS X would mount that as something like /Volumes/Adium/ until you eject the DMG.

The ‘disks’ listed in the Finder sidebar in the section headed Devices are just links to the contents of /Volumes/.

You can see this for yourself by opening a Finder window and either hitting the key-combo ⌘+shift+g, or navigating to Go → Go to Folder… in the menubar, to bring up the Go To Folder text box, and then typing the path /Volumes and hitting return.

OS X’s greatly simplified handling of mount points definitely makes OS X less confusing, but, the simplicity comes at a price. If you DO want to do more complicated things like having your home folders on a separate partition, you are stepping outside of what Apple considers the norm, and into a world of pain. On Linux/Unix separating out home folders is trivial, on OS X it’s a mine-field!

We’ll leave it here for now. Next time we’ll learn how to navigate around a Unix/Linux/OS X filesystem.

TTT Part 4 of n — Navigation

In the previous segment, we discussed the concept of a file system in detail. We described how filesystems contain folders which contain files or folders, and we described the different ways in which Windows and Linux/Unix/OS X combine all the filesystems on our computers into a single name-space, within which every file has a unique ‘path’ (F:\myFolder\myFile.txt -v- /Volumes/myThumbDrive/myFolder/myFile.txt).

In this instalment, we’ll look at how to navigate around the Unix/Linux/OS X filesystem in a Bash command shell.

Navigating around

Two instalments ago we learned that, just like a Finder window, a command prompt is ‘in’ a single folder/directory at any time.

That folder is known as the current working directory or the present working directory.

Although the default Bash command prompt on OS X will show us the name of our current folder, it doesn’t show us the full path.

To see the full path of the folder you are currently in, you need the pwd (present working directory) command.

This is a very simple command that doesn’t need any arguments.

When you open an OS X Terminal, by default your current working directory will be your home directory, so, if you open a Terminal now and type just pwd you’ll see something like:
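The exact path depends on your account. As a sketch only, assuming a hypothetical user whose short name is allison, the session might look like this:

```shell
# Print the full path of the folder the shell is currently 'in'
pwd

# With a hypothetical OS X user called allison, a freshly opened Terminal
# would print something like: /Users/allison
```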

Knowing where you are is one thing, the next thing you might want to do is look around to see what’s around you, and for that, you’ll need the ls (list) command.

If you run the command without any arguments you’ll see a listing of all the visible files and folders in the current directory. On OS X, this default view is annoying in two ways. Firstly, you’ll see your files and folders spread out over multiple columns, so scanning for a file name alphabetically becomes annoyingly confusing, especially if the list scrolls. Secondly, on OS X (though not on most Linux distros), you won’t be able to tell what is a file and what is a folder at a glance, you’ll just see names, which is really dumb (even DOS does a better job by default!).

You can force ls to display the contents of a folder in a single column in two ways.

You can either use the -l flag to request a long-form listing, showing lots of metadata along with each file name, or, you can use the -1 flag to specify that you just want the names but in a single column.

For now, most of the metadata shown in the long-form listing is just confusing garbage, so you are probably better off using -1.

If you do want to use the long-form listing, I suggest adding the -h flag to convert the file size column to human-readable file sizes like 100K, 5M, and 64G.

I’ve trained myself to always use ls -lh and never to use just ls -l.

You have two options for making it easy to distinguish files from folders in the output from ls on OS X.

You can either use the -F flag to append a / to the end of every folder’s name, or, the -G flag to use colour outputs (folders will be in blue).

The -F flag will work on Linux and Unix, but the -G flag is a BSD Unix thing and doesn’t work on Linux.

Linux users need to use the more logical --color instead.

I said that ls shows you all the visible files in your current directory, what if you want to see all the files, including hidden files?

Simple, just use the -a flag.
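The flags described above can be tried out safely in a throwaway folder. The sketch below assumes the standard mktemp command is available; the file and folder names are invented purely for illustration.

```shell
# Build a throwaway folder to experiment in (all names here are made up)
scratch=$(mktemp -d)
mkdir "$scratch/myFolder"
touch "$scratch/myFile.txt" "$scratch/.hiddenFile"
cd "$scratch"

ls -1      # names only, one per line; hidden files are normally left out
ls -1F     # as above, but folders get a trailing /
ls -1a     # include hidden entries such as .hiddenFile
ls -lh     # long-form listing with human-readable sizes

# Capture two of the listings so they can be inspected afterwards
with_slashes=$(ls -1F)
with_hidden=$(ls -1a)
```

Flags can be combined freely, so ls -1aF gives a single column that includes hidden items and marks folders with a /.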

Finally, before we move away from ls (for now), I should mention that you can use ls to show you the content of any folder, not just the content of your current folder.

To show the content of any folder or folders, use the path(s) as regular arguments to ls.

E.g. to see what is in your system-level library folder you can run:

ls -1G /Library

Now that we can see where we are with pwd, and look around us with ls, the next obvious step is moving around the filesystem, but, we need to take a small detour before we’re ready to talk about that.

In the last instalment, we talked about file paths like the imaginary file on Allison’s thumb drive with the path /Volumes/Allison_Pen_Drive/myFolder/myFile.txt.

That type of path is called an absolute path and is one of two types of path you can use as arguments to Linux/Unix commands.

Absolute paths (AKA full paths) are like full addresses, or phone numbers starting with the + symbol, they describe the location of a file without reference to anything but the root of the filesystem.

They will work no matter what your present working directory is.

When you need to be explicit, like say when you’re doing shell scripting, you probably want to use absolute paths, but, they can be tediously long and unwieldy.

This is where relative paths come in, relative paths don’t describe where a file or folder is relative to the root of the file system, but, instead, relative to your present working directory.

If you are stopped for directions and someone wants to know where the nearest gas station is, you don’t give them the full address, you give them directions relative to where they are at that moment.

Similarly, if you want to phone someone in the same street you don’t dial + then the country code then the area code then their number, you just dial the number because, like your command shell is in a current working directory, your telephone is in an area code.

With phone numbers, you can tell whether something is a relative or an absolute phone number by whether or not it starts with a +.

With Unix/Linux paths the magic character is /.

Any path that starts with a / will be interpreted as an absolute path by the OS, and conversely, any path that does not begin with a / will be interpreted as a relative path.
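As a minimal sketch of that rule, the helper below looks at nothing but the first character of a path. Note that path_kind is a made-up name for illustration, not a standard command, and the example paths are illustrative too.

```shell
# Classify a path the way the rule above describes: by its first character.
# path_kind is a hypothetical helper, not something that ships with the OS.
path_kind() {
  case "$1" in
    /*) echo "absolute" ;;
    *)  echo "relative" ;;
  esac
}

path_kind "/Volumes/Allison_Pen_Drive/myFolder/myFile.txt"   # absolute
path_kind "myFolder/myFile.txt"                              # relative
path_kind "Music/iTunes"                                     # relative
```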

If you are in your home folder, you can describe the relative path to your iTunes library file as Music/iTunes/iTunes\ Library.xml (note the backslash to escape the space in the path).

That means that your home folder contains a folder called Music, which contains a folder called iTunes, which contains a file called iTunes Library.xml.

Describing relative paths to items deeper in the file system hierarchy from you is easy, but what if you need to go the other way, not to folders contained in your current folder, but instead to the folders that contain your current folder?

Have another look at the output of ls -aG1 in any folder.

What are the top two entries?

I don’t have to know what folder you are in to know the answer: the first entry will be a folder called . and the second a folder called .., and these two are the key to allowing relative paths that go up the chain.

The folder . is a hard link to the current folder.

If you are in your home folder, ls ./Documents and ls Documents will do the same thing, show you the contents of a folder called Documents in your current folder.

This seems pointless, but trust me, it will prove to be important and useful in the future.

For now, the more interesting folder is .., which is a hard link to the folder that contains the current folder. I.e. it allows you to specify relative paths that move back towards / from where you are.

In OS X, home directories are stored in a folder called /Users.

As well as one folder for each user (named for the user), /Users also contains a folder called Shared which is accessible by every user to facilitate easy local file sharing.

Regardless of your username, the relative path from your home folder to /Users/Shared is always ../Shared (unless you moved your home folder to a non-standard location of course).

.. means go back one level, then move forward to Shared.

You can go back as many levels as you want until you hit / (where .. is hard-linked to itself), e.g. the relative path from your home folder to / is ../../.
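To experiment with . and .. without touching your real home folder, you can build a miniature copy of the layout in a temporary location. This is only a sketch: it assumes mktemp is available, and the user name allison is just an example.

```shell
# Build a miniature copy of the OS X layout in a throwaway folder
# (the user name allison is only an example)
sandbox=$(mktemp -d)
mkdir -p "$sandbox/Users/allison/Documents" "$sandbox/Users/Shared"

cd "$sandbox/Users/allison"
ls -1a             # the listing includes . and ..
ls -1 ./Documents  # same as: ls -1 Documents
ls -1 ..           # the folder containing allison, i.e. Users

cd ../Shared       # up one level, then down into Shared
pwd                # the path now ends in /Users/Shared
landed_in=$(pwd)
```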

Finally, the Bash shell (and all other common Unix/Linux shells) provides one other very special type of path, home folders.

We have mentioned in passing in previous instalments that ~ means ‘your home directory’.

No matter where on the filesystem you are, ~/Music/iTunes/iTunes\ Library.xml always points to your iTunes library file, because the shell expands ~ to the absolute path of your home folder.

But, the ~ character does a little more than that, it can take you to ANY user’s home folder simply by putting their username after the ~.

Imagine Allison & Steve share a computer.

Allison’s username is allison, and Steve’s is steve.

Allison and Steve can each access their own iTunes libraries at ~/Music/iTunes/iTunes\ Library.xml, but, Allison can also access Steve’s at ~steve/Music/iTunes/iTunes\ Library.xml, and likewise, Steve can access Allison’s at ~allison/Music/iTunes/iTunes\ Library.xml (all assuming the file permissions we are ignoring for now, are set appropriately of course).
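To see what the shell actually does with ~, you can ask it to print the expanded paths. This is a sketch only: the output depends on the accounts on your machine, and root is used here merely because that account exists on practically every Unix-like system.

```shell
# The shell replaces an unquoted ~ with the absolute path of your home folder
echo ~
echo ~/Music

# ~username expands to that user's home folder
echo ~root

# Quoting switches the expansion off, leaving a literal ~
echo "~/Music"

# The expansion also happens when assigning to a variable
my_home=~
echo "My home folder is $my_home"
```

Because the expansion happens before a command ever runs, commands like ls and cd simply receive an ordinary absolute path.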

So — now that we understand that we can have absolute or relative paths, we are finally ready to start navigating the file system by changing our current directory.

The command to do this is cd (change directory).

Firstly, if you ever get lost and you want to get straight back to your home directory, just run the cd command with no arguments and it will take you home!

Generally, though, we want to use the cd command to navigate to a particular folder. To do that, simply use either the absolute or relative path to the folder you want to navigate to as the only argument to the cd command and, assuming the path you entered is free of typos, off you’ll go!

Finally, for this instalment, I just want to mention one other nice trick the cd command has up its sleeve, it has a (very short) memory.

If you type cd - you will go back to wherever you were before you last used cd.

As an example, let’s say you spent ages navigating a very complex file system and are now 20 folders deep.

You’ve forgotten how you got there, but you’ve finally found that obscure system file you need to edit to make some app do some non-standard thing.

Then, you make a boo-boo, and you accidentally type just cd on its own, and all of a sudden, you are back in your home folder.

Don’t panic, you won’t have to find that complicated path again, just type cd - and you’ll be right back where you were before you rubber-fingered the cd command!
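The rescue can be rehearsed safely in a scratch folder. In this sketch the folder names are arbitrary, and cd / stands in for the accidental jump (a bare cd behaves the same way, it just lands you in your home folder instead).

```shell
# Create a deeply nested folder to get 'lost' in (the names are arbitrary)
maze=$(mktemp -d)
mkdir -p "$maze/a/b/c/d/e"
cd "$maze/a/b/c/d/e"
deep_folder=$(pwd)

cd /        # wander off somewhere else, much like a stray cd would
pwd         # prints /

cd -        # jump back; cd also prints the folder it returns to
pwd         # back in the deep folder
back_again=$(pwd)
```

Running cd - a second time flips you back to / again, so it works as a toggle between your two most recent folders.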

That’s where we’ll leave things for this instalment. We now understand the structure of our file systems and how to navigate around them. Next time we’ll dive head-long into these file permissions we’ve been ignoring for the last two instalments.

For any Windows users out there, the DOS equivalents are as follows:

- instead of pwd, use cd with no arguments

- instead of ls, use dir (though it has way fewer cool options)

- cd is cd, though again, it has way fewer cool options

TTT Part 5 of n — File Permissions

In this instalment, it’s time to make a start on one of the most important Unix/Linux concepts, file permissions. This can get quite confusing, but it’s impossible to overstate the importance of understanding how to read and set permissions on files and folders. To keep things manageable, I’m splitting understanding and altering permissions into two separate instalments.

Linux and Unix (and hence OS X) all share a common file permissions system, but while they share the same common core, they do each add their own more advanced permissions systems on top of that common core. In this first instalment, we’re only going to look at the common core, so everything in this instalment applies equally to Linux, Unix, and OS X. In future instalments, we’ll take a brief look at the extra file information and permissions OS X associates with files, but we won’t be looking at the Linux side of things, where more granular permissions are provided through kernel extensions like SELinux.

Files and permissions

Let’s start with some context. Just like every command shell has a present working directory, every process on a Linux/Unix system is also owned by a user, including shell processes. So, when you execute a command in a command shell, that process has a file system location associated with it and a username. By default your shell will be running as the user you logged into your computer as, though you can become a different user if and when you need to (more on that in future instalments). You can see which user you are running as with the very intuitive command:

whoami

Secondly, users on Unix/Linux systems can be members of one or more groups.

On OS X there are a number of system groups to which your user account may belong, including one called staff to which all admin users belong.

You can see what groups you belong to with the command:

groups

(You can even see the groups any username belongs to by adding the username as an argument.)

On older versions of OS X creating your own custom groups was hard.

Thankfully Apple has addressed this shortcoming in more recent versions of the OS, and you can now create and manage your own custom groups in the Users & Groups preference pane (click the + button and choose group as the user type, then use the radio buttons to add or remove people from the group).

Unix/Linux file systems like EXT and HFS+ store metadata about each file and folder as part of that file or folder’s entry in the file system. Some of that metadata is purely informational, things like the date the file was created, and the date it was last modified, but that metadata also includes ownership information and a so-called Unix File Permission Mask.

There are two pieces of ownership information stored about every file and folder: a UID, and a GID. What this means is that every file and folder belongs to one user and one group.

In the standard Linux/Unix file permissions model there are only three permissions that can be granted on a file or folder:

- Read (r): if set on a file it means the contents of the file can be read. If set on a folder it means the names of the files and folders contained within the folder can be listed.

- Write (w): if set on a file it means the contents can be altered. If set on a folder it means files or folders can be created within, or deleted from, the folder.

- Execute (x): if set on a file it means the file can be run. The OS will refuse to run any file, be it a script or a binary executable, if the user does not have execute permission. When set on a folder, execute permission controls whether or not the user can enter the folder and access the items inside it. If you are trying to access a file, and execute permission is blocked on even one folder along the absolute path to the file, access will be denied.

All permutations of these three permissions are possible on any file, even if some of them are counter-intuitive and rarely needed.

The Unix file Permission Mask ties all these concepts together.

The combination of the context of the executing process and the metadata in a file or folder determines the permissions that apply.

You can use the ls -l command to see the ownership information and file permission mask associated with any file or folder.

The hard part is interpreting the meaning of the file permission mask.

On standard Unix/Linux systems, this mask contains ten characters, though on OS X it can contain an optional 11th or even 12th character appended to the end of the mask (we’ll be ignoring these for this instalment).

The first character specifies the ‘type’ of the file:

- - signifies a regular file

- d signifies a directory (i.e. a folder)

- l signifies a symbolic link (more on these in a later instalment)

- b, c, p, and s are also valid file types, but they are used to represent things like block devices and sockets rather than ‘normal’ files, and we’ll be ignoring them in this series.

The remaining nine characters represent three sets of read, write, and execute permissions (rwx), specified in that order.

If a permission is present then it is represented by an r, w, or x, and if it’s not present, it’s represented by a -.

The first group of three permission characters are the permissions granted to the user who owns the file, the second three are the permissions granted to all users who are members of the group that owns the file, and the last three are the permissions granted to everyone, regardless of username or group membership.
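As a sketch of how the ten characters break down, the helper below slices a mask into its four parts. Note that describe_mask is a made-up name for illustration rather than a standard command, and the sample mask is invented.

```shell
# Split a ten-character permission mask into its four parts.
# describe_mask is a hypothetical helper, not a standard command.
describe_mask() {
  mask=$1
  echo "type:     $(printf '%s' "$mask" | cut -c1)"
  echo "owner:    $(printf '%s' "$mask" | cut -c2-4)"
  echo "group:    $(printf '%s' "$mask" | cut -c5-7)"
  echo "everyone: $(printf '%s' "$mask" | cut -c8-10)"
}

describe_mask "-rwxr-xr--"
# type:     -
# owner:    rwx
# group:    r-x
# everyone: r--
```

Read this way, the sample mask describes a regular file that its owner can read, write, and run, that members of its group can read and run, and that everyone else can only read.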

To figure out what permissions you have on a file you need to know the following things:

- your username

- what groups you belong to

- what user the file or folder belongs to

- what group the file or folder belongs to

- the file or folder’s permission mask

When you try to read the contents of a file, your OS will figure out whether or not to grant you that access using the following algorithm:

- is the user trying to read the file the owner of the file? If so, the owner’s read permission decides: allow the read if it is granted, deny it if not, and stop here.

- otherwise, is the user trying to read the file a member of the group that owns the file? If so, the group’s read permission decides in the same way.

- otherwise, check the global read permission, and allow or deny access as specified.

Write and execute permissions are processed in exactly the same way.
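The decision can be mimicked with a few lines of shell. This is a sketch rather than how any kernel actually implements it: can_read is a made-up helper, the user and group names are invented, it ignores the superuser (who bypasses these checks), and it assumes the standard POSIX rule that the first class you match (owner, then group, then everyone) is the one whose flag decides.

```shell
# can_read is a hypothetical helper that mimics the decision; it is not a real command.
# Arguments: user, space-separated list of the user's groups, file owner,
# file group, ten-character permission mask.
can_read() {
  user=$1; user_groups=$2; owner=$3; group=$4; mask=$5

  if [ "$user" = "$owner" ]; then
    flag=$(printf '%s' "$mask" | cut -c2)    # the owner's read flag
  elif printf '%s\n' $user_groups | grep -qxF -e "$group"; then
    flag=$(printf '%s' "$mask" | cut -c5)    # the group's read flag
  else
    flag=$(printf '%s' "$mask" | cut -c8)    # everyone's read flag
  fi

  if [ "$flag" = "r" ]; then echo "allowed"; else echo "denied"; fi
}

can_read allison "staff admin" allison staff "-rw-r-----"   # owner: allowed
can_read steve   "staff"       allison staff "-rw-r-----"   # group member: allowed
can_read guest   "everyone"    allison staff "-rw-r-----"   # neither: denied
can_read allison "staff"       allison staff "----r-----"   # owner without r: denied
```

The last call shows the consequence of the first-match assumption: the owner is refused even though the group flag would have allowed the read.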

When you see the output of ls -l, you need to mentally follow the same algorithm to figure out whether or not you have a given permission on a given file or folder.

The three columns to look at are the mask, the file owner, and the file group.

We’ll stop here for now.

In the next instalment, we will explain the meaning of the + and @ characters which can show up at the end of a file permission mask on OS X, and we’ll look at the commands for altering the permissions on a file or folder.

TTT Part 6 of n — More File Permissions

In the previous instalment of this series, we had a look at how standard Unix File Permissions worked. We looked at how to understand the permissions on existing files and folders, but not at how to change them. We also mentioned that the standard Unix file permissions are now only a subset of the file permissions on OS X and Linux (OS X also supports file ACLs, and Linux has SELinux as an optional extra layer of security).

In this instalment, we’ll start by biting the bullet and diving into how to alter standard Unix File permissions. This could well turn out to be the most difficult segment in this entire series, regardless of how big 'n' gets, but it is very important, so if you have trouble with it, please don’t give up. After we do all that hard work we’ll end with a simpler topic, reading OS X file ACLs, and OS X extended file attributes. We’ll only be looking at how to read these attributes though, not how to alter them.

As a reminder, last time we learned that every file and folder in a Unix/Linux file system has three pieces of metadata associated with it that control the standard Unix file permissions that apply to that file or folder. Files have an owner (a user), a group, and a Unix File Permission Mask associated with them, and all three of these pieces of information can be displayed with ls -l.

We’ll be altering each of these three pieces of metadata in this instalment.

Altering Unix File Permissions — Setting the File Ownership

The command to change the user that owns one or more files or folders is chown (change owner).

The command takes a minimum of two arguments, the username to change the ownership to, and one or more files or folders to modify.

E.g.:

chown bart myFile.txt

The command can also optionally take a -R flag to indicate that the changes should be applied ‘recursively’, that is, if the ownership of a folder is changed, the ownership of all files and folders contained within that folder should also be changed.

The chown command is very picky about the placement of the flag though, it MUST come before any other arguments. E.g.:

chown -R bart myFolder

Similarly, the command to change the group that a file belongs to is chgrp (change group). It behaves in the same way as chown, and also supports the -R flag to recursively change the group.

E.g.:

chgrp -R staff myFolder

Finally, if you want to change both user and group ownership of files or folders at the same time, the chown command provides a handy shortcut. Instead of passing just a username as the first argument, you can pass a username and group name pair separated by a :, so the previous two examples can be rolled into the one example below:

chown -R bart:staff myFolder

Altering Unix File Permissions — Setting the Permission Mask

The command to alter the permission mask, or file mode, is chmod (change mode).

In many ways it’s similar to the chown and chgrp commands. It takes the same basic form, and supports the -R flag, however, the formatting of the first argument — the permission you want to set — can be very confusing.

The command actually supports two entirely different approaches to setting the permissions. I find both of them equally obtuse, and my advice to people is to pick one and stick with it. Long ago I chose the numeric approach to setting file permissions, so that’s the approach we’ll use here.

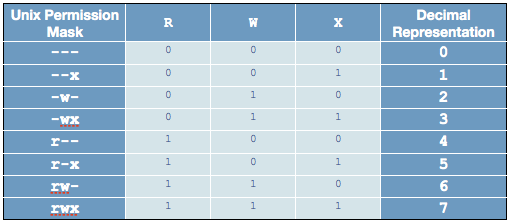

This approach is based on treating the three permissions, read, write, and execute as a three-digit binary number, if you have read permission, the first digit is a 1, if not, it’s a 0, and the same for the write and execute permissions.

So, the permissions rwx would be represented by the binary number 111, the permissions r-x by 101, and r-- by 100.

Since there are three sets of rwx permissions (user, group, everyone), a full Unix file permission mask is defined by three three-digit binary numbers.

Unfortunately, the chmod command doesn’t take the binary numbers in binary form, it expects you to convert them to decimal [1] first, and pass it the three sets of permissions as three digits.

This sounds hard, but with a little practice, it’ll soon become second-nature.

The key to reading off the permissions is this table:

Rather than trying to memorise the table itself, you should try to learn the process for creating it instead.

The lighter coloured cells in the centre of the table are the important ones to be able to re-create on demand.

They are not random: they are a standard binary to decimal conversion table, and you should notice that the three columns have a distinct pattern. The right-most column alternates from 0 to 1 as you go down, the column second from the right has two 0s, then two 1s, then two 0s, etc., and finally the third column from the right has four 0s, then four 1s.

If you wanted to convert a 4-digit binary number to decimal you would add a fourth column that has eight 0s then eight 1s. If you wanted to convert a 5-digit binary number you’d add yet another column with sixteen 0s then sixteen 1s, and so on. Each column you go to the left doubles the number of 0s and 1s before repeating.

If you can reproduce this table on demand you’ll have learned two things — how to do Unix file permissions, and how to convert any arbitrary binary number to decimal (though there are better ways if the binary number has many digits).
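If you would like to check your hand-drawn table, the following sketch prints the eight rows from the shell. The function name permission_table and the column layout (binary digits, decimal digit, permissions) are my own choices for this illustration, not a reproduction of the table as printed.

```shell
# permission_table: hypothetical helper that prints binary digits, decimal digit, and permissions.
permission_table() {
    local n r w x perms
    for n in 0 1 2 3 4 5 6 7; do
        r=$(( (n >> 2) & 1 ))   # third column from the right: four 0s, then four 1s
        w=$(( (n >> 1) & 1 ))   # second column from the right: two 0s, two 1s, repeating
        x=$(( n & 1 ))          # right-most column: alternates 0, 1
        perms=""
        if [ "$r" -eq 1 ]; then perms="${perms}r"; else perms="${perms}-"; fi
        if [ "$w" -eq 1 ]; then perms="${perms}w"; else perms="${perms}-"; fi
        if [ "$x" -eq 1 ]; then perms="${perms}x"; else perms="${perms}-"; fi
        echo "$r$w$x  $n  $perms"
    done
}

permission_table
```

Reading down the first three characters of each row should show exactly the column patterns described above.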

Even if you don’t want to learn how to create the table, you’ll probably still be fine if you remember just the most common permissions:

- 4 = read-only
- 5 = read and execute
- 6 = read and write
- 7 = full access

If you run a website, for example, regular files like HTML pages and images should have permissions 644 (rw-r--r--: you get read and write, everyone else gets read). Executable files and folders should have 755 (rwxr-xr-x: you get full permission, everyone else can list the folder contents and read the files within).
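One possible way to apply those two masks across a whole site is to let find pick out the folders and the regular files separately. This is only a sketch under some assumptions: the folder and file names are invented, it works in a throwaway temporary folder, and it treats every regular file as non-executable, so any scripts that need 755 would have to be handled separately.

```shell
# Build a throwaway site in a temporary folder so nothing real is touched.
site=$(mktemp -d)
mkdir -p "$site/images"
touch "$site/index.html" "$site/images/logo.png"

# Folders get 755 so everyone can list them and reach the files inside.
find "$site" -type d -exec chmod 755 {} +

# Regular files get 644: you can read and write, everyone else can only read.
find "$site" -type f -exec chmod 644 {} +

# Show the resulting permission masks.
ls -lR "$site"
```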

Let’s end with a few examples. If you want to alter a file you own so that you have read, write and execute permission, but no one else can access the file in any way you would use the command:

chmod 700 myFile.txt

If the file should not be executable even by you, then you would use:

chmod 600 myFile.txt

Clearly, this is not intuitive, and it’s understandably very confusing to most people at first. Everyone needs to go over this a few times before it sinks in, so if it doesn’t make sense straight away, you’re not alone. Do please keep at it though, this is very important stuff.

Reading OS X File ACLs

We said last time that on OS X, a + at the end of a file permission mask signifies that the file has ACLs (access control lists) associated with it.

These ACLs allow more granular permissions to be applied to files on top of the standard Unix File Permissions.

If either the ACLs OR the standard Unix permissions deny you the access you are requesting, OS X will block you.

You can read the ACLs associated with files by adding the -le flags to the ls command.

If a file in the folder you are listing the contents of has file ACLs, they will be listed underneath the file, one ACL per line, and indented relative to the files in the list.

Each ACL associated with a file is numbered, and the numbering starts from 0.

The ACLs read as quite Englishy, so you should be able to figure out what they mean just by looking at them. As an example, let’s have a look at the extended permissions on OS X home directories:

bart-imac:~ bart$ ls -le /Users

total 0

drwxrwxrwt 10 root wheel 340 22 Feb 21:42 Shared

drwxr-xr-x+ 12 admin staff 408 26 Dec 2011 admin

0: group:everyone deny delete

drwxr-xr-x+ 53 bart staff 1802 13 Jul 14:35 bart

0: group:everyone deny delete

bart-imac:~ bart$

By default, all OS X home folders are in the folder /Users, which is the folder the above command lists the contents of.

You can see here that my home folder (bart) has one or more file ACLs associated with it because it has a + at the end of the permissions mask.

On the lines below you can see that there is only one ACL associated with my home folder and that it’s numbered 0.

The contents of the ACL are:

group:everyone deny delete

As you might expect, this means that the group everyone is denied permission to delete my home folder.

Everyone includes me, so while the Unix file permissions (rwxr-xr-x) give me full control over my home folder, the ACL stops me deleting it.

The same is true of the standard folders within my account like Documents, Downloads, Library, Movies, Music, etc.

If you’re interested in learning to add ACLs to files or folders, you might find this link helpful: www.techrepublic.com/blog/mac/…

Reading OS X Extended File Attributes

In the last instalment, we mentioned that all files in a Linux/Unix file system have metadata associated with them such as their creation date, last modified date, and their ownership and file permission information. OS X allows arbitrary extra metadata to be added to any file. This metadata can be used by applications or the OS when interacting with the file.

For example, when you give a file a colour label, that label is stored in an extended attribute. If you give a file or folder a custom Finder icon, that gets stored in an extended attribute (this is how Dropbox.app makes your Dropbox folder look different even though it’s a regular folder). Similarly, Spotlight comments are stored in an extended attribute, and third-party tagging apps also use extended attributes to store the tags you associate with a given file (presumably OS X Mavericks will adopt the same approach for the new standard file tagging system it will introduce to OS X).

Extended attributes take the form of name-value-pairs.

The name, or key, is usually quite long to prevent collisions between applications, and, like plist files, is usually named in reverse-DNS order.

E.g., all extended attributes set by Apple have names that start with com.apple, which is the reverse of Apple’s domain name, apple.com.

So, if I were to write an OS X app that used extended file attributes, the correct thing for me to do would be for me to prefix all my extended attribute names with ie.bartb, and if Allison were to do the same she should prefix hers with com.podfeet.

(Note that this is a great way to avoid name-space collisions since every domain only has one owner. This approach is used in many places, including Java package naming.)
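To make the reverse-DNS idea concrete, here is a small sketch that flips a domain name into the prefix you would use. The function name reverse_dns is invented for this example; nothing in OS X provides it.

```shell
# reverse_dns: hypothetical helper that reverses the dot-separated labels of a domain name.
reverse_dns() {
    local rest=$1 reversed="" label
    while [ -n "$rest" ]; do
        label=${rest%%.*}                          # take the first label
        if [ "$rest" = "$label" ]; then
            rest=""                                # that was the last label
        else
            rest=${rest#*.}                        # drop the first label and its dot
        fi
        reversed=${label}${reversed:+.$reversed}   # put the new label in front
    done
    echo "$reversed"
}

reverse_dns apple.com      # prints com.apple
reverse_dns podfeet.com    # prints com.podfeet
reverse_dns bartb.ie       # prints ie.bartb
```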

The values associated with the keys can be simple strings, but complex data is often stored in a binary form, which tools display as HEX.

This means the contents of many extended attributes are not easily human-readable.

Any file that has extended attributes will have an @ symbol appended to its Unix file permission mask in the output of ls -l.

To see the list of the names/keys for the extended attributes belonging to a file you can use ls -l@.

You can’t use ls to see the actual contents of the extended attributes though, only to get their names.

To see the names and values of all extended attributes on one or more files use:

xattr -l [file list]

The nice thing about the -l flag is that if the value stored in an extended attribute is binary data rather than plain text, it displays the value as a HEX dump and shows the ASCII equivalent next to the HEX for you.

Apple uses extended attributes to track where files have been downloaded from, by what app, whether they are executable, and whether or not you have dismissed the warning you get the first time you run a downloaded file.

Because of this, every file in your Downloads folder will contain extended attributes, so ~/Downloads is a great place to experiment with xattr.

As an example, I downloaded the latest version of the XKpasswd library from my website (xkpasswd-v0.2.1.zip).

I can now use xattr to see all the extended attributes OS X added to that file like so:

bart-imac:~ bart$ xattr -l ~/Downloads/xkpasswd-v0.2.1.zip

com.apple.metadata:kMDItemDownloadedDate:

00000000 62 70 6C 69 73 74 30 30 A1 01 33 41 B7 91 BF D6 |bplist00..3A....|

00000010 37 DB A1 08 0A 00 00 00 00 00 00 01 01 00 00 00 |7...............|

00000020 00 00 00 00 02 00 00 00 00 00 00 00 00 00 00 00 |................|

00000030 00 00 00 00 13 |.....|

00000035

com.apple.metadata:kMDItemWhereFroms:

00000000 62 70 6C 69 73 74 30 30 A2 01 02 5F 10 39 68 74 |bplist00..._.9ht|

00000010 74 70 3A 2F 2F 77 77 77 2E 62 61 72 74 62 75 73 |tp://www.bartbus|

00000020 73 63 68 6F 74 73 2E 69 65 2F 64 6F 77 6E 6C 6F |schots.ie/downlo|

00000030 61 64 73 2F 78 6B 70 61 73 73 77 64 2D 76 30 2E |ads/xkpasswd-v0.|

00000040 32 2E 31 2E 7A 69 70 5F 10 2E 68 74 74 70 3A 2F |2.1.zip_..http:/|

00000050 2F 77 77 77 2E 62 61 72 74 62 75 73 73 63 68 6F |/www.bartbusscho|

00000060 74 73 2E 69 65 2F 62 6C 6F 67 2F 3F 70 61 67 65 |ts.ie/blog/?page|

00000070 5F 69 64 3D 32 31 33 37 08 0B 47 00 00 00 00 00 |_id=2137..G.....|

00000080 00 01 01 00 00 00 00 00 00 00 03 00 00 00 00 00 |................|

00000090 00 00 00 00 00 00 00 00 00 00 78 |..........x|

0000009b

com.apple.quarantine: 0002;51e18856;Safari;6425B1FC-1E4C-4DB1-BD0D-6161A2DE0593

bart-imac:~ bart$

You can see that OS X has added three extended attributes to the file, com.apple.metadata:kMDItemDownloadedDate, com.apple.metadata:kMDItemWhereFroms and com.apple.quarantine.

The first two of these attributes hold binary data, which xattr displays as HEX, while com.apple.quarantine is a plain string.

The HEX representation of the data looks meaningless to us humans of course, but OS X understands what it all means, and the xattr command is nice enough to display the ASCII next to the HEX for us.
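To see how the HEX column relates to the ASCII column, the sketch below decodes the first eight bytes of the dump above by hand. The function name hex_to_ascii is made up for this illustration, and it only copes with printable characters, which is all this example needs.

```shell
# hex_to_ascii: hypothetical helper that turns a list of HEX bytes into their ASCII characters.
hex_to_ascii() {
    local byte out=""
    for byte in "$@"; do
        # Convert the HEX byte to octal, then let printf emit the matching character.
        out="$out$(printf "\\$(printf '%03o' "0x$byte")")"
    done
    echo "$out"
}

# The first eight bytes of the kMDItemDownloadedDate value shown above.
decoded=$(hex_to_ascii 62 70 6C 69 73 74 30 30)
echo "$decoded"    # prints bplist00
```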

In the case of the download date, it’s encoded in such a way that even the ASCII representation of the data is of no use to us, but we can read the URL from the second extended attribute, and we can see that Safari didn’t just save the URL of the file (http://www.bartbusschots.ie/downloads/xkpasswd-v0.2.1.zip), but also the URL of the page we were on when we clicked to download the file (http://www.bartbusschots.ie/blog/?page_id=2137).

Finally, the quarantine information is mostly meaningless to humans, except that we can clearly see that the file was downloaded by Safari.
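Since the quarantine value is a plain string with its parts separated by semicolons, the name of the downloading app can be picked out with cut. This is a sketch that simply assumes the app name is always the third field, as it is in the example above; the variable names are my own.

```shell
# The com.apple.quarantine value copied from the xattr output above.
quarantine='0002;51e18856;Safari;6425B1FC-1E4C-4DB1-BD0D-6161A2DE0593'

# Split on semicolons and keep the third field (assumed to be the app name).
agent=$(printf '%s\n' "$quarantine" | cut -d';' -f3)
echo "$agent"    # prints Safari
```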

The xattr command can also be used to add, edit, or remove extended attributes from a file, but we won’t be going into that here.

Wrapup

That’s where we’ll leave things for this instalment. Hopefully, you can now read all the metadata and security permissions associated with files and folders in OS X, and you can alter the Unix file permissions on files and folders.

We’ve almost covered all the basics when it comes to dealing with files in the Terminal now. We’ll finish up with files next time when we look at how to copy, move, delete, and create files from the Terminal.

TTT Part 7 of n — Managing Files

So far in this series we’ve focused mostly on the file system, looking at the details of file systems, how to navigate them, and at file permissions and metadata. We’re almost ready to move on and start looking at how processes work in Unix/Linux/OS X, but we have a few more file-related commands to look at before we do.

In this instalment, we’ll be looking at how to manipulate the file system. In other words, how to create files and folders, how to copy them, how to move them, how to rename them, and finally how to delete them.

Creating Folders & Files

This is one of those topics that I think is best taught through example, so let’s start by opening a Terminal window and navigating to our Documents folder:

cd ~/Documents

We’ll then create a folder called TtT6n in our Documents folder with the command:

mkdir TtT6n

As you can see, directories/folders are created using the mkdir (make directory) command.

When used normally the command can only create folders within existing folders.

A handy flag to know is the -p (for path) flag which will instruct mkdir to create all parts of a path that do not yet exist in one go, e.g.:

mkdir -p TtT6n/topLevelFolder/secondLevelFolder

Since the TtT6n folder already existed, the command will have no effect on it. However, within that folder it will first create a folder called topLevelFolder, and then within that folder, it will create a folder called secondLevelFolder.

At this stage let’s move into the TtT6n folder from where we’ll execute the remainder of our examples:

cd TtT6n

We can now use the -R (for recursive) flag for ls to verify that the mkdir -p command did what we expect it to. I like to use the -F flag we met before with -R so that folder names have a trailing / appended:

ls -RF

When using ls -R the contents of each folder are separated by a blank line, and for folders deeper down than the current folder each listing is prefixed with the relative path to the folder about to be listed followed by a :.

In other words, we are expecting to see just a single entry in the first segment, a folder called topLevelFolder, then we expect to see a blank line followed by the name of the next folder to be listed, which will be the aforementioned topLevelFolder, followed by the listing of its contents, which is also just one folder, this time called secondLevelFolder.

This will then be followed by a header and listing of the contents of secondLevelFolder, which is currently empty.
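If you would rather rehearse these steps without touching your real Documents folder, the sketch below repeats them inside a temporary folder. The variable name demo is my own, and the exact layout of the ls -RF listing can differ slightly between OS X and Linux, so no expected output is shown.

```shell
# Work in a throwaway folder rather than ~/Documents.
demo=$(mktemp -d)
mkdir "$demo/TtT6n"

# Without -p, mkdir refuses because topLevelFolder does not exist yet.
if ! mkdir "$demo/TtT6n/topLevelFolder/secondLevelFolder" 2>/dev/null; then
    echo "plain mkdir failed because the parent folder is missing"
fi

# With -p, every missing part of the path is created in one go.
mkdir -p "$demo/TtT6n/topLevelFolder/secondLevelFolder"

# List the result recursively; the subshell keeps your current folder unchanged.
(cd "$demo/TtT6n" && ls -RF)
```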

Let’s now create two empty files in the deepest folder within our test folder (secondLevelFolder).

There are many ways to create a file in Unix/Linux, but one of the simplest is to use the touch command. The main purpose of this command is to change the last edited date of an existing file to the current time, but if you try to touch a file that doesn’t exist, touch creates it for you: